Amazon Bedrock Integration

Amazon Bedrock is a fully managed AWS service that lets you use foundation models and custom models to generate text, images, and audio.

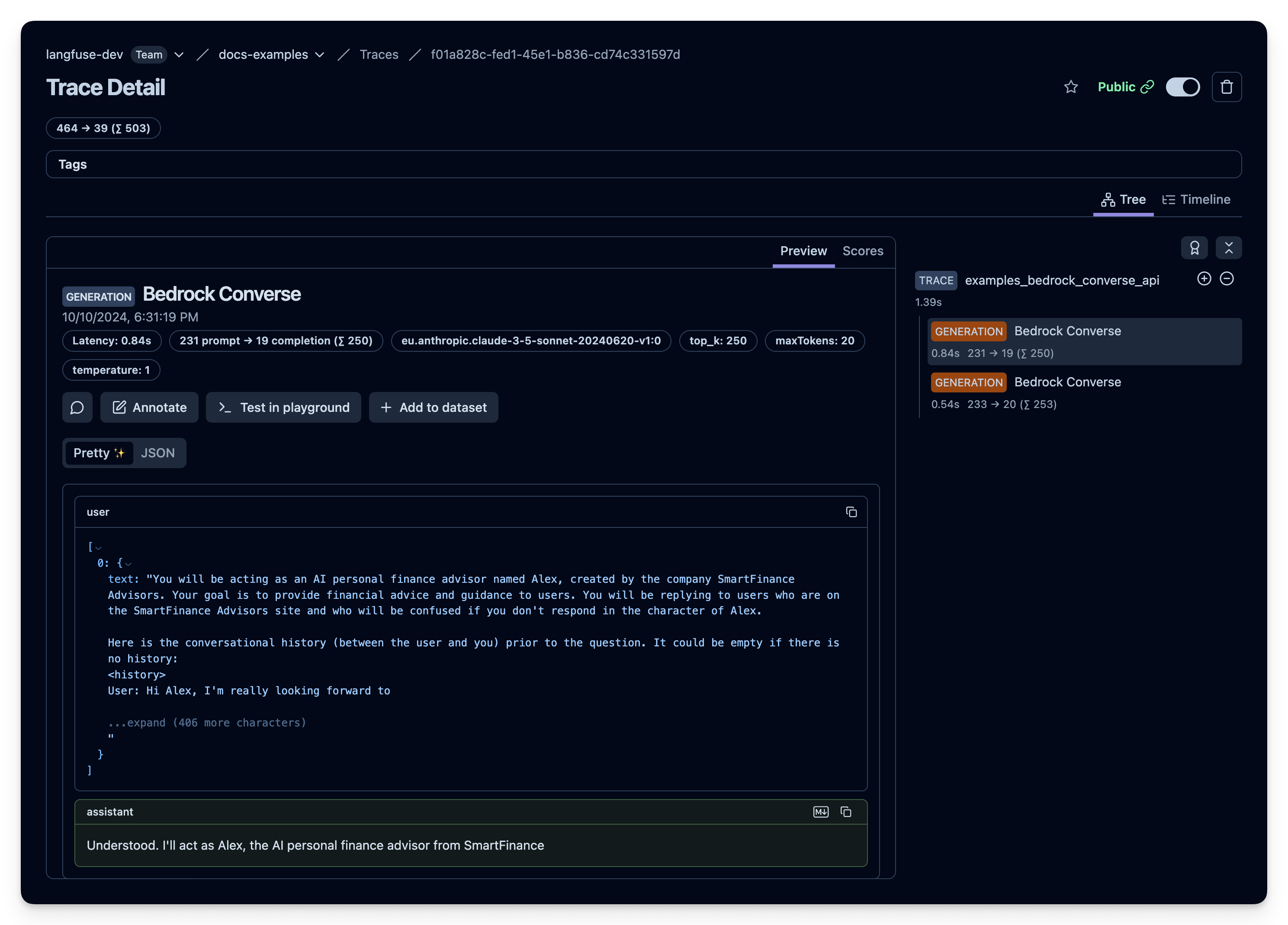

When using Langfuse with Amazon Bedrock, you can easily capture detailed traces and metrics for every request, giving you insights into the performance and behavior of your application.

All Langfuse features in the UI besides tracing (playground, LLM-as-a-judge evaluation, prompt experiments) are fully compatible with Amazon Bedrock; just add your Bedrock configuration in the project settings.

Integration Options

There are several ways to capture traces and metrics for Amazon Bedrock:

- via an application framework that is integrated with Langfuse (for example, LangChain)
- via a proxy such as LiteLLM
- via wrapping the Bedrock SDK with the Langfuse decorator (see example below)

How to wrap the Amazon Bedrock SDK (Converse API)

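```python
# install requirements
# The wrapper below uses the v2 decorator API (`langfuse.decorators`),
# which is no longer available in Langfuse Python SDK v3.
%pip install boto3 "langfuse<3" awscli --quiet
```

Authenticate AWS Session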

Sign in with an AWS role that has access to Amazon Bedrock, then provide its credentials in the cell below.
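Pasting keys into a notebook makes them easy to leak. Below is a minimal alternative sketch. It assumes the credentials are exported as the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables, plus `AWS_SESSION_TOKEN` for temporary role credentials, and builds the keyword arguments for `boto3.client` from them. The helper `aws_client_kwargs` is hypothetical. boto3 also reads these variables on its own when no credentials are passed, so the explicit version mainly makes the source of the credentials visible.

```python
import os
from typing import Mapping, Optional


def aws_client_kwargs(region_name: str, env: Optional[Mapping[str, str]] = None) -> dict:
    """Build keyword arguments for boto3.client() from the standard AWS_* variables.

    `env` defaults to os.environ; passing a plain dict makes the helper testable.
    AWS_SESSION_TOKEN is only required for temporary (role) credentials, so it
    is added only when it is set and non-empty.
    """
    env = os.environ if env is None else env
    kwargs = {
        "region_name": region_name,
        # A missing required variable raises KeyError here, before any AWS call
        "aws_access_key_id": env["AWS_ACCESS_KEY_ID"],
        "aws_secret_access_key": env["AWS_SECRET_ACCESS_KEY"],
    }
    session_token = env.get("AWS_SESSION_TOKEN")
    if session_token:
        kwargs["aws_session_token"] = session_token
    return kwargs


# Usage (needs boto3 and valid credentials in the environment):
# import boto3
# bedrock_runtime = boto3.client("bedrock-runtime", **aws_client_kwargs("eu-west-1"))
```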

```python
import boto3

# Credentials of the AWS role (replace the placeholders; never commit real keys)
AWS_ACCESS_KEY_ID = "***"
AWS_SECRET_ACCESS_KEY = "***"
AWS_SESSION_TOKEN = "***"

# used to access Bedrock configuration (e.g., listing available models)
bedrock = boto3.client(
    service_name="bedrock",
    region_name="eu-west-1",
    aws_access_key_id=AWS_ACCESS_KEY_ID,
    aws_secret_access_key=AWS_SECRET_ACCESS_KEY,
    aws_session_token=AWS_SESSION_TOKEN,
)

# used to invoke the Bedrock Converse API
bedrock_runtime = boto3.client(
    service_name="bedrock-runtime",
    region_name="eu-west-1",
    aws_access_key_id=AWS_ACCESS_KEY_ID,
    aws_secret_access_key=AWS_SECRET_ACCESS_KEY,
    aws_session_token=AWS_SESSION_TOKEN,
)
```

```python
# Check which models are available in your account
models = bedrock.list_inference_profiles()

for model in models["inferenceProfileSummaries"]:
    print(model["inferenceProfileName"] + " - " + model["inferenceProfileId"])
```

Example output:

```
EU Anthropic Claude 3 Sonnet - eu.anthropic.claude-3-sonnet-20240229-v1:0
EU Anthropic Claude 3 Haiku - eu.anthropic.claude-3-haiku-20240307-v1:0
EU Anthropic Claude 3.5 Sonnet - eu.anthropic.claude-3-5-sonnet-20240620-v1:0
EU Meta Llama 3.2 3B Instruct - eu.meta.llama3-2-3b-instruct-v1:0
EU Meta Llama 3.2 1B Instruct - eu.meta.llama3-2-1b-instruct-v1:0
```

Set Langfuse Credentials
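The cell below selects the EU or US cloud host by commenting one line in or out. A minimal alternative sketch maps a region name to the same two host URLs and refuses empty keys, so a missing key is caught early instead of surfacing later as failed trace uploads. The helper `langfuse_env` and the region names `"eu"` and `"us"` are hypothetical; only the URLs come from the cell below.

```python
# Host URLs as used in the credentials cell: EU cloud and US cloud
LANGFUSE_HOSTS = {
    "eu": "https://cloud.langfuse.com",
    "us": "https://us.cloud.langfuse.com",
}


def langfuse_env(public_key: str, secret_key: str, region: str = "eu") -> dict:
    """Return the LANGFUSE_* environment variables for a Langfuse cloud region."""
    if not public_key or not secret_key:
        raise ValueError("Both the Langfuse public and secret key must be non-empty")
    try:
        host = LANGFUSE_HOSTS[region.lower()]
    except KeyError:
        raise ValueError(
            f"Unknown region {region!r}; expected one of {sorted(LANGFUSE_HOSTS)}"
        ) from None
    return {
        "LANGFUSE_PUBLIC_KEY": public_key,
        "LANGFUSE_SECRET_KEY": secret_key,
        "LANGFUSE_HOST": host,
    }


# Usage: os.environ.update(langfuse_env("<public key>", "<secret key>", region="us"))
```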

```python
import os

# Get keys for your project from the project settings page
# https://cloud.langfuse.com
os.environ["LANGFUSE_PUBLIC_KEY"] = ""
os.environ["LANGFUSE_SECRET_KEY"] = ""
os.environ["LANGFUSE_HOST"] = "https://cloud.langfuse.com"  # 🇪🇺 EU region
# os.environ["LANGFUSE_HOST"] = "https://us.cloud.langfuse.com"  # 🇺🇸 US region
```

Wrap Bedrock SDK
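Step 1 of the wrapper below only reshapes the Converse keyword arguments, so it can be checked without AWS access or a Langfuse project. The minimal standalone sketch here shows which arguments become the generation's input, model, model parameters and metadata. The name `split_converse_kwargs` is hypothetical. If a key appears in both configs, the value from the provider-specific `additionalModelRequestFields` wins because it is unpacked last.

```python
def split_converse_kwargs(kwargs: dict) -> tuple:
    """Split Converse API keyword arguments into the fields logged to Langfuse.

    Returns (messages, model_id, model_parameters, metadata). The caller's dict
    is not modified because everything is popped from a shallow copy.
    """
    rest = dict(kwargs)
    messages = rest.pop("messages", None)
    model_id = rest.pop("modelId", None)
    # Generic settings and provider-specific settings merged into one flat dict
    model_parameters = {
        **rest.pop("inferenceConfig", {}),
        **rest.pop("additionalModelRequestFields", {}),
    }
    # Anything left over (for example a `system` prompt) is kept as metadata
    return messages, model_id, model_parameters, rest


messages, model_id, params, metadata = split_converse_kwargs({
    "modelId": "eu.anthropic.claude-3-5-sonnet-20240620-v1:0",
    "messages": [{"role": "user", "content": [{"text": "Hi"}]}],
    "inferenceConfig": {"maxTokens": 500, "temperature": 1},
    "additionalModelRequestFields": {"top_k": 250},
})
print(params)  # {'maxTokens': 500, 'temperature': 1, 'top_k': 250}
```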

```python
from botocore.exceptions import ClientError
from langfuse.decorators import langfuse_context, observe


@observe(as_type="generation", name="Bedrock Converse")
def wrapped_bedrock_converse(**kwargs):
    # 1. extract model metadata
    kwargs_clone = kwargs.copy()
    input_messages = kwargs_clone.pop("messages", None)
    model_id = kwargs_clone.pop("modelId", None)
    model_parameters = {
        **kwargs_clone.pop("inferenceConfig", {}),
        **kwargs_clone.pop("additionalModelRequestFields", {}),
    }
    langfuse_context.update_current_observation(
        input=input_messages,
        model=model_id,
        model_parameters=model_parameters,
        metadata=kwargs_clone,
    )

    # 2. model call with error handling
    try:
        response = bedrock_runtime.converse(**kwargs)
    except (ClientError, Exception) as e:
        error_message = f"ERROR: Can't invoke '{model_id}'. Reason: {e}"
        langfuse_context.update_current_observation(level="ERROR", status_message=error_message)
        print(error_message)
        return None

    # 3. extract response metadata
    response_text = response["output"]["message"]["content"][0]["text"]
    langfuse_context.update_current_observation(
        output=response_text,
        usage_details={
            "input": response["usage"]["inputTokens"],
            "output": response["usage"]["outputTokens"],
            "total": response["usage"]["totalTokens"],
        },
        metadata={
            "ResponseMetadata": response["ResponseMetadata"],
        },
    )
    return response_text
```

Run Example

```python
# Conversation according to the AWS spec, including prompting + history
user_message = """You will be acting as an AI personal finance advisor named Alex, created by the company SmartFinance Advisors. Your goal is to provide financial advice and guidance to users. You will be replying to users who are on the SmartFinance Advisors site and who will be confused if you don't respond in the character of Alex.

Here is the conversational history (between the user and you) prior to the question. It could be empty if there is no history:
<history>
User: Hi Alex, I'm really looking forward to your advice!
Alex: Hello! I'm Alex, your AI personal finance advisor from SmartFinance Advisors. How can I assist you with your financial goals today?
</history>

Here are some important rules for the interaction:
- Always stay in character, as Alex, an AI from SmartFinance Advisors.
- If you are unsure how to respond, say "I'm sorry, I didn't quite catch that. Could you please rephrase your question?"
"""

conversation = [
    {
        "role": "user",
        "content": [{"text": user_message}],
    }
]
```
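The conversation above packs the persona, the prior turns and the rules into a single user message, marking the history with `<history>` tags. The Converse API also accepts prior turns as separate messages with `user` and `assistant` roles. For models that support system prompts, the instructions can go in the `system` parameter instead. The minimal sketch below builds such a messages list. It is an alternative to the inline history, not the approach used in this example. The helper names and the follow-up question are hypothetical.

```python
def text_message(role: str, text: str) -> dict:
    """Build one Converse message: a role plus a list of content blocks."""
    if role not in ("user", "assistant"):
        raise ValueError(f"role must be 'user' or 'assistant', got {role!r}")
    return {"role": role, "content": [{"text": text}]}


def build_conversation(history: list, question: str) -> list:
    """Turn (role, text) pairs plus a new user question into a messages list."""
    messages = [text_message(role, text) for role, text in history]
    messages.append(text_message("user", question))
    return messages


structured_conversation = build_conversation(
    [
        ("user", "Hi Alex, I'm really looking forward to your advice!"),
        ("assistant", "Hello! I'm Alex, your AI personal finance advisor from SmartFinance Advisors. "
                      "How can I assist you with your financial goals today?"),
    ],
    "How much of my income should I save each month?",  # hypothetical follow-up question
)
```

The list could be passed as `messages=structured_conversation` to `wrapped_bedrock_converse`, together with `system=[{"text": ...}]` holding the persona and rules. Because step 1 of the wrapper keeps unrecognized arguments, `system` would be logged as metadata rather than as the generation's input.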

```python
@observe()
def examples_bedrock_converse_api():
    responses = {}

    responses["anthropic"] = wrapped_bedrock_converse(
        modelId="eu.anthropic.claude-3-5-sonnet-20240620-v1:0",
        messages=conversation,
        inferenceConfig={"maxTokens": 500, "temperature": 1},
        additionalModelRequestFields={"top_k": 250},
    )

    responses["llama3-2"] = wrapped_bedrock_converse(
        modelId="eu.meta.llama3-2-3b-instruct-v1:0",
        messages=conversation,
        inferenceConfig={"maxTokens": 500, "temperature": 1},
    )

    return responses


res = examples_bedrock_converse_api()

for key, value in res.items():
    print(f"{key.title()}\n{value}\n")
```

Example output:

```
Anthropic
Understood. I'll continue to act as Alex, the AI personal finance advisor from SmartFinance Advisors, maintaining that character throughout our interaction. I'll provide financial advice and guidance based on the user's questions and needs. If I'm unsure about something, I'll ask for clarification as instructed. How may I assist you with your financial matters today?

Llama3-2
Hello again! I'm glad you're excited about receiving my advice. How can I assist you with your financial goals today? Are you looking to create a budget, paying off debt, saving for a specific goal, or something else entirely?
```
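When a call fails, the wrapper prints the error, marks the observation with level `ERROR` and returns `None`. The printing loop therefore shows `None` for a failed model. The minimal sketch below separates successful responses from failed models before printing. The helper `split_results` and the sample dictionary are hypothetical.

```python
def split_results(responses: dict) -> tuple:
    """Separate successful responses from models whose call failed (value None)."""
    succeeded = {name: text for name, text in responses.items() if text is not None}
    failed = sorted(name for name, text in responses.items() if text is None)
    return succeeded, failed


# Hypothetical results: one model answered, one call failed
succeeded, failed = split_results({"anthropic": "Hello!", "llama3-2": None})

for name, text in succeeded.items():
    print(f"{name.title()}\n{text}\n")
if failed:
    print("Failed models (see the ERROR observations in Langfuse):", ", ".join(failed))
```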

Can I monitor Amazon Bedrock cost and token usage in Langfuse?

Yes, you can monitor cost and token usage of your Bedrock calls in Langfuse. The native integrations with LLM application frameworks and the LiteLLM proxy will automatically report token usage to Langfuse.

If you use the Langfuse decorator or the low-level Python SDK, you can report token usage and, optionally, cost information directly. See the example above for details.
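Step 3 of the wrapper reports the Converse `usage` block to Langfuse as `usage_details` with the keys `input`, `output` and `total`. The minimal standalone sketch below performs the same mapping but tolerates a missing field instead of raising `KeyError`. The helper name is hypothetical and the token counts in the usage example are made up.

```python
def converse_usage_details(response: dict) -> dict:
    """Map the `usage` block of a Converse response to Langfuse usage keys."""
    usage = response.get("usage", {})
    details = {
        "input": usage.get("inputTokens", 0),
        "output": usage.get("outputTokens", 0),
    }
    # Fall back to the sum if the response carries no totalTokens field
    details["total"] = usage.get("totalTokens", details["input"] + details["output"])
    return details


# Hypothetical response fragment with made-up token counts
usage_details = converse_usage_details({"usage": {"inputTokens": 120, "outputTokens": 80, "totalTokens": 200}})
print(usage_details)  # {'input': 120, 'output': 80, 'total': 200}
```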

You can define custom price information via the Langfuse UI or API (see docs) to match the exact pricing of your models on Amazon Bedrock.
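Once a price is defined, the cost of a call is each usage type's token count multiplied by its unit price, summed. The minimal sketch below shows that arithmetic, assuming prices quoted per 1,000 tokens. It uses `Decimal` to avoid floating-point rounding. The helper name and the prices are hypothetical placeholders, not Amazon Bedrock's actual rates.

```python
from decimal import Decimal


def estimate_cost(usage: dict, prices_per_1k: dict) -> Decimal:
    """Estimate one call's cost from token counts and per-1,000-token prices.

    `usage` uses the keys reported by the wrapper ("input", "output");
    `prices_per_1k` holds prices keyed the same way, given as strings.
    """
    total = Decimal("0")
    for key in ("input", "output"):
        total += Decimal(usage.get(key, 0)) * Decimal(prices_per_1k[key]) / 1000
    return total


# Hypothetical prices: look up the real ones for your model and region
cost = estimate_cost({"input": 1200, "output": 300}, {"input": "0.003", "output": "0.015"})
print(cost)  # 0.0081
```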

Additional Resources

- langfuse-genaiops: a notebook maintained by the AWS team, including a collection of AWS-specific examples.
- Metadocs: Monitoring your Langchain app’s cost using Bedrock with Langfuse, featuring the LangChain integration and custom model price definitions for Bedrock models.
- Self-hosting guide for deploying Langfuse on AWS.